- Developing a real-time EMS triage pipeline using multimodal AI for trauma prediction.

- Collaborating with surgeons on AI-assisted decision support systems.

Chi-Sheng (Michael) Chen 陳麒升

I am a Research Fellow at Harvard Medical School and Beth Israel Deaconess Medical Center (BIDMC), and Co-founder of Omnis Labs, an AI-driven DeFi liquidity positioning protocol.

My research focuses on multimodal AI systems for medical and clinical applications, spanning clinical biosignal processing, speech and language understanding, and quantum machine learning. Previously, I was an AI Trainer at OpenAI, CTO of Neuro Industry, Inc., and a Digital IC Design Engineer at MediaTek.

I hold an M.S. in Computer Science and Bioinformatics from National Taiwan University and dual bachelor's degrees (B.Eng. & B.S.) in Physics and Electrical Engineering from National Chiao Tung University (now National Yang Ming Chiao Tung University).

I am seeking PhD opportunities in clinical AI and/or quantum machine learning, starting Fall 2026.

You can contact me at: cchen34 [at] bidmc.harvard.edu | m50816m50816 [at] gmail.com

News

- 🚩 Mar 2026: Two posters presented at NVIDIA GTC 2026 — "GPU-Accelerated Chinese Brain-to-Text Interface Powered by NVIDIA H100 80GB" and "Accessible Quantum Reinforcement Learning for Finance: Benchmarking on NVIDIA RTX GPUs."

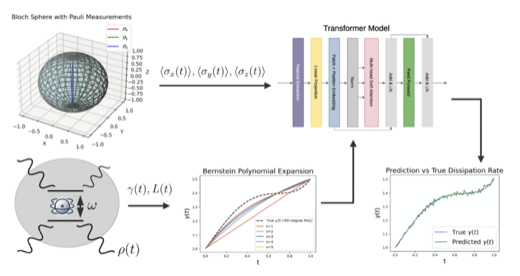

- 🚩 Jan 2026: Two papers accepted at IEEE ICASSP 2026 — "Quantum Reinforcement Learning-Guided Diffusion Model for Image Synthesis" and "Quantum and Classical Machine Learning in Decentralized Finance."

- 🚩 Aug 2025: Two papers accepted at IEEE GLOBECOM Workshop 2025 — "Q-DPTS" and "Benchmarking Quantum and Classical Sequential Models."

- 🚩 Jun 2025: Paper accepted at IEEE QCE 2025 — "Quantum Reinforcement Learning Trading Agent for Sector Rotation."

- 🚩 May 2025: Paper accepted at IEEE CIBCB 2025 — "Enhancing Clinical Decision-Making."

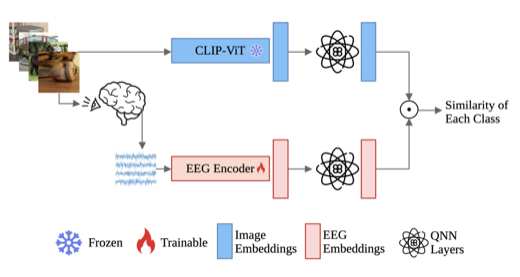

- 🚩 Jan 2025: Paper accepted at IEEE ICASSP 2025 — "Quantum Multimodal Contrastive Learning Framework."

Research Vision

I aim to build clinically deployable AI systems that bridge quantum computing, multimodal learning, and emergency medicine. Modern clinical environments generate rich, heterogeneous signals — EEG, audio, imaging, text — yet real-time decision support remains fragmented. My work addresses this gap through two complementary directions: (1) designing hybrid quantum-classical architectures that capture complex temporal dependencies in biosignals, and (2) engineering end-to-end multimodal pipelines that integrate speech, language, and physiological data for trauma triage and psychiatric treatment prediction. A core principle of my research is clinical translation: my EEG-based models are already serving real patients at the Precision Depression Intervention Center in Taipei. Beyond healthcare, my time-series methods have been validated in DeFi market-making, achieving 50%–120% base fee APR in production.

Selected Publications

Featured Projects

Research

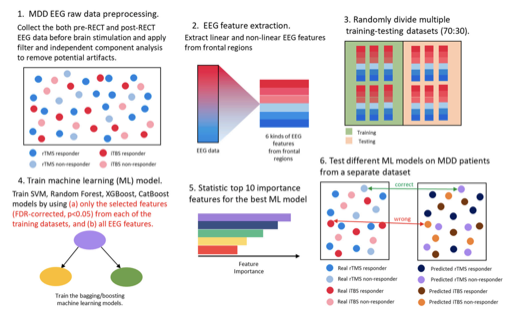

Clinical AI & Biosignal Processing

Developing AI systems for clinical neuroscience and emergency medicine.

My EEG-based depression treatment prediction models have been clinically deployed at the Precision Depression Intervention Center (PreDIC) at Taipei Veterans General Hospital, serving real outpatients.

At Harvard/BIDMC, I am building real-time EMS triage pipelines

Speech & Language Processing for Healthcare

Building speech and natural language processing systems for clinical settings, including EMS audio transcription, automated clinical documentation, and emergency page generation for trauma prediction workflows.

Quantum Machine Learning

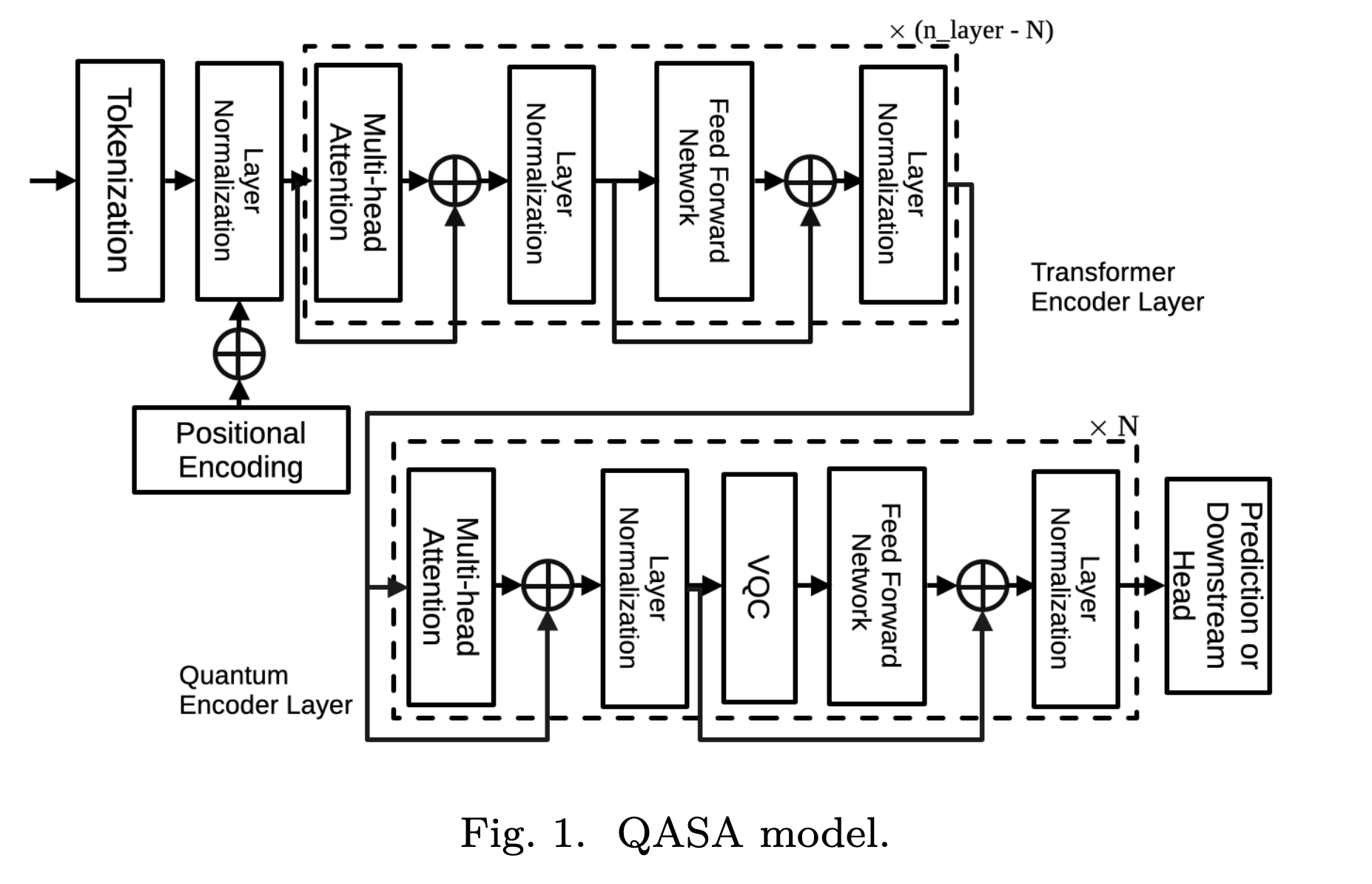

Designing hybrid quantum-classical architectures for time-series and sequential data, including the Quantum Adaptive Self-Attention (QASA)

Computer Vision

Applying deep learning to visual recognition tasks, including surgical instrument detection for intraoperative safety, and fine-grained food classification with foundation models such as Res-VMamba

Time-Series Classification & Forecasting

Developing interpretable and geometric deep learning methods for time-series analysis, including FreqLens

Research Experience

- Built a GCP-based MLOps pipeline, enabling training on 10K+ EEG samples.

- Two research papers on quantum machine learning for EEG signal processing.

- One research paper on EEG-to-image generation using a diffusion model.

- Focusing on the dynamics of nonstationary time series (such as EEG) and their applications in AI models.

- Deep learning applications for surgical automation with a YOLO-based model.

- Developed a prototype for a surgical smoke evacuation system.

- Collaborated with researchers and clinicians to identify patterns and trends in brainwave activity related to major depressive disorder.

- Utilized machine learning algorithms to develop predictive models based on real-world brainwave data.

- Searching for possible new unconventional superconductors among Co-based quaternary chalcogenides with the diamond-like structure CuInCo₂A₄ / AgInCo₂A₄ (A = Te, Se, S).

Projects & Industry

- AI-driven DeFi liquidity positioning protocol with automated market maker (AMM) strategies and backtesting systems.

- Achieved 50%–120% base fee APR on WBTC/USDC and ETH/USDC pairs (Katana on SushiSwap) using time-series model-driven AMM strategies.

- Contributed to Reinforcement Learning from Human Feedback (RLHF) pipelines through high-complexity AI data labeling, preference rankings, and model-behavior assessments for instruction following, multimodal reasoning, and safety alignment.

- Microprocessor IP development for flagship 5G smartphones' display and AMBA SoC implementation.

- Hardware virtualization architecture RTL design and IP verification with UVM and SystemVerilog.

Education

- Advisors: Prof. Dr. Cheng-Ta Li, MD & Prof. Chung-Ping Chen

- Machine learning applications on non-stationary time-series data.

- The EEG model developed in this research for personalized TMS pattern prediction is currently being applied to real outpatients at the Precision Depression Intervention Center (PreDIC), Taipei Veterans General Hospital.

- Researched oxide processing techniques. (Intern at Max Planck Institute)

- Researched biomedical signal processing on traditional Chinese medicine data. (Advisor: Prof. Sheng-Chieh Huang)

Professional Service

Conference Reviewer

ICML 2026, KDD 2026, MICCAI 2026, ICASSP 2026

Journal Reviewer (2025–present)

- IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)

- IEEE Transactions on Neural Networks and Learning Systems (TNNLS)

- IEEE Transactions on Neural Systems & Rehabilitation Engineering (TNSRE)

- IEEE Transactions on Consumer Electronics

- IEEE Transactions on Audio, Speech, and Language Processing (TASLP)

- IEEE Transactions on Cognitive and Developmental Systems (TCDS)

- IEEE Access

- npj Quantum Information

- EPJ Quantum Technology

- Quantitative Finance and Economics

- AI, Computer Science and Robotics Technology

- Biomedical Physics & Engineering Express

- Engineering Research Express

- International Journal of Machine Learning and Cybernetics

Invited Talks & Presentations

Awards & Achievements

- 2023: Certificate of the 20th National Innovation Award, clinical research category.

- 2019: International Blockchain Olympiad (IBCOL): World Finalist (100+ teams).

- 2017: Microsoft International Imagine Cup: 2nd place in Taiwan.

- 2016: International Genetically Engineered Machine Competition (iGEM): World Gold medal, Best Applied Design, Best Part Collection, Best Presentation (300+ university teams).